Make ZeroGPU Spaces faster with PyTorch ahead-of-time (AoT) compilation

Sources: https://huggingface.co/blog/zerogpu-aoti, Hugging Face Blog

Overview

ZeroGPU lets anyone run powerful NVIDIA H200 hardware in Hugging Face Spaces without dedicating GPUs to idle traffic. It provides efficient, flexible demos, but it does not always use the full potential of the CUDA stack. PyTorch ahead-of-time (AoT) compilation addresses this by exporting a compiled model once and reloading it instantly in new processes, yielding snappier demos and smoother experiences. In practice, AoT delivers speedups of around 1.3×–1.8× on models such as Flux.1-dev, Wan, and LTX when used inside ZeroGPU Spaces.

AoT is especially valuable for ZeroGPU’s short-lived, just-in-time task model. Compiling at every run is costly on ZeroGPU because a process spins up for each GPU task; AoT instead pre-compiles the optimized graph once and reuses it. This makes it feasible to use high-performance TorchInductor tooling in a Space without incurring cold-start penalties.

The post walks through wiring AoT into ZeroGPU Spaces, covers advanced tricks such as FP8 quantization and dynamic shapes, and provides working demos you can try immediately. ZeroGPU’s 3g.71gb MIG slice is currently supported (with 7g.141gb in a future rollout). The underlying approach keeps the main program memory-light and ensures the GPU is allocated only when needed.

Key features

- Export-then-reload AoT: Compile a model once and reuse the compiled program across processes and tasks, reducing startup overhead.

- Spaces integration: Use the spaces package with the GPU decorator to enable ZeroGPU’s just-in-time behavior and AoT workflow.

- AoT tooling: Leverage spaces.aoti_capture to capture example inputs, spaces.aoti_compile to generate an AoT-compiled binary, and spaces.aoti_apply to patch the pipeline safely without memory bloat.

- PyTorch internals: spaces.aoti_compile wraps torch._inductor.aot_compile and takes a PyTorch ExportedProgram (produced by torch.export.export) as its input.

- Quantization: FP8 post-training dynamic quantization can be combined with AoT for additional speedups (requires CUDA compute capability 9.0+).

- Dynamic shapes: The export can be configured to treat certain inputs as dynamic shapes, enabling faster handling of varying image/video resolutions.

- Model-per-resolution option: For highly dynamic video generation (e.g., Wan) you can compile one model per resolution while sharing parameters.

- FA3 compatibility: Flash Attention 3 can be used inside AoT-compiled workflows to push performance further.

- Hardware specifics: ZeroGPU runs on H200s; the 3g.71gb MIG slice is currently available, with the full 7g.141gb slice planned for late 2025.

- Demonstrated speedups: A representative example shows ~1.7× improvement on Flux.1-dev in a ZeroGPU Space; general results cited include 1.3×–1.8× depending on model.

For deeper background, see the original article which also links to TorchAO and PyTorch AoTInductor tutorials.

Common use cases

- Live demos and showcases in Hugging Face Spaces where user activity is sparse and CPU/GPU idle time is costly.

- Image and video generation tasks (e.g., Flux and Wan family models) where inference latency is critical for interactive demos.

- Scenarios requiring fast startup of compiled models across multiple Spaces or tasks, avoiding per-task compilation overhead.

- Workflow experiments that combine AoT with FP8 quantization and dynamic shape handling to maximize throughput on H200 hardware.

- Multi-resolution video generation where you dispatch the right precompiled model for a given output size while sharing parameters.
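The multi-resolution case can be sketched as a small dispatcher that picks a precompiled variant by output size. This is an illustrative pattern only: the ResolutionDispatcher class, the resolutions, and the lambda stand-ins are all hypothetical, and in a real Space each registered variant would be an AoT-compiled callable sharing one set of weights.

```python
from typing import Callable, Dict, Tuple

Resolution = Tuple[int, int]  # (height, width)
Variant = Callable[[str], str]


class ResolutionDispatcher:
    """Route a request to the variant compiled for its output size."""

    def __init__(self, fallback: Variant) -> None:
        self._variants: Dict[Resolution, Variant] = {}
        self._fallback = fallback  # e.g. the uncompiled (eager) model

    def register(self, resolution: Resolution, fn: Variant) -> None:
        self._variants[resolution] = fn

    def __call__(self, prompt: str, resolution: Resolution) -> str:
        # Use the precompiled variant when one exists, otherwise fall back.
        return self._variants.get(resolution, self._fallback)(prompt)


# Hypothetical stand-ins for compiled models; the sizes are examples only.
dispatch = ResolutionDispatcher(fallback=lambda p: f"eager:{p}")
dispatch.register((480, 832), lambda p: f"compiled-480x832:{p}")
dispatch.register((832, 480), lambda p: f"compiled-832x480:{p}")

landscape = dispatch("a cat", (480, 832))
unknown = dispatch("a cat", (720, 1280))
print(landscape)
print(unknown)
```

Keeping a fallback path means an unexpected resolution degrades to slower inference rather than failing outright.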

Setup & installation

The article describes how to enable AoT within ZeroGPU Spaces using the spaces package and PyTorch Inductor tooling. The workflow intercepts example inputs, exports the module to a PyTorch ExportedProgram, compiles it ahead of time, and replaces the transformer in the pipeline with the compiled version via a safe patching utility. Compilation must run on a real GPU because AoT tuning relies on hardware-specific micro-benchmarks.

Key steps (conceptual):

- Wrap the target computation inside a @spaces.GPU function to ensure proper device management and to enable the just-in-time lifecycle used by ZeroGPU.

- Use spaces.aoti_capture to intercept input arguments for the transformer and record representative inputs for export.

- Export the transformer (or the heavy component) to a PyTorch ExportedProgram using torch.export.export.

- Compile the exported program with spaces.aoti_compile, which wraps torch._inductor.aot_compile and handles saving/loading as needed.

- Patch the pipeline with spaces.aoti_apply to substitute the original transformer.forward with the compiled model and to clean old parameters from memory.

- Run inference within a @spaces.GPU-decorated function to ensure hardware compatibility and to benefit from the AoT-compiled graph.

Note: The article emphasizes that exact commands are environment-specific and that a real GPU is required during compilation.

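Exact commands are not provided in the source; see the article for the full workflow. The outline below is illustrative, not verbatim from the source, and assumes pipe is a diffusers pipeline already loaded at module level:

    import spaces
    import torch

    @spaces.GPU
    def compile_transformer():
        # Run the pipeline once so aoti_capture records the transformer's inputs.
        with spaces.aoti_capture(pipe.transformer) as call:
            pipe("arbitrary example prompt")
        # Export the transformer with the captured inputs, then AoT-compile it.
        exported = torch.export.export(
            pipe.transformer, args=call.args, kwargs=call.kwargs
        )
        return spaces.aoti_compile(exported)

    # Patch the transformer so it runs the compiled model, then run
    # inference from another @spaces.GPU function.
    spaces.aoti_apply(compile_transformer(), pipe.transformer)

Quick start (minimal runnable outline)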

- Identify the heavy transformer component in your diffusion or generation pipeline (e.g., the denoiser/transformer block in Flux.1-dev).

- Wrap the demo code in a @spaces.GPU-decorated function to enable ZeroGPU’s just-in-time behavior.

- Capture inputs with aoti_capture, export with torch.export.export, compile with aoti_compile, and patch with aoti_apply.

- Run a small sample inference to verify speedups and ensure the compiled path is engaged.

The article notes that the compiled transformer ran about 1.7× faster in a Flux.1-dev example and that FP8 quantization can add another ~1.2× speedup when appropriate.
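To see what the two reported factors could mean together, here is a small arithmetic sketch. It assumes the AoT and FP8 gains compose multiplicatively, which the source does not state explicitly, and the 10-second baseline is a hypothetical number chosen only to make the result concrete.

```python
def combined_speedup(*factors: float) -> float:
    """Multiply speedup factors, assuming they compose independently."""
    total = 1.0
    for factor in factors:
        total *= factor
    return total


def latency_after(baseline_s: float, speedup: float) -> float:
    """Latency after applying a speedup factor to a baseline latency."""
    return baseline_s / speedup


aot_only = 1.7   # reported for the Flux.1-dev example
fp8_extra = 1.2  # approximate additional gain from FP8 quantization
total = combined_speedup(aot_only, fp8_extra)

baseline_s = 10.0  # hypothetical baseline latency, for illustration only
print(f"combined speedup: {total:.2f}x")                         # 2.04x
print(f"latency: {latency_after(baseline_s, total):.2f}s")       # 4.90s
```

Measured results depend on the model and hardware, so treat the product as a rough expectation rather than a guarantee.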

Pros and cons

- Pros

  - Dramatic reductions in startup/compilation latency for ZeroGPU Spaces.

  - Clear separation between model export, compilation, and runtime execution.

  - Ability to combine with FP8 quantization and dynamic shapes for additional gains.

  - Compatibility with FA3 for additional speedups in generation models.

  - Works with ZeroGPU’s on-demand, fork-based CUDA initialization to maximize resource efficiency.

- Cons

  - Requires a real GPU at compile time due to hardware-dependent tuning.

  - Some models may require multiple compiled variants (per resolution) for optimal results.

  - Setup involves multiple moving parts (capture, export, compile, apply) that must be kept in sync across Space instances.

Alternatives (brief comparisons)

| Approach | Pros | Cons |
|---|---|---|
| AoT with spaces.aoti_* tooling | Fast reload across processes; avoids per-run compilation; supports dynamic shapes and FP8 | Requires a GPU during compilation; integration steps across a few APIs; model/component separation needed |
| PyTorch torch.compile (JIT) | Simpler path for standard environments; compiles on first run and caches results to filesystem | In ZeroGPU, each task spins up a new process, so reuse via filesystem cache is limited; cold-start penalties persist |
| Non-AoT, standard inference | Simple, no export/compile complexity | Substantial startup overhead on ZeroGPU; less efficient for sparse traffic patterns |
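The difference between the first two rows comes down to how often the compile cost is paid. The toy cost model below makes that concrete under stated assumptions: every number is hypothetical, and the JIT case models the worst case in which no compilation cache is reused between tasks.

```python
def total_time(tasks: int, per_task_s: float, compile_s: float,
               compile_every_task: bool) -> float:
    """Total wall time when compilation is paid once or once per task."""
    compiles = tasks if compile_every_task else 1
    return compiles * compile_s + tasks * per_task_s


# All values are hypothetical and chosen for illustration only.
TASKS = 100
COMPILE_S = 60.0          # assumed compile time
COMPILED_TASK_S = 6.0     # assumed per-task inference time once compiled

# Worst case for JIT on ZeroGPU: each fresh process recompiles.
jit_no_reuse = total_time(TASKS, COMPILED_TASK_S, COMPILE_S, True)
# AoT: compile once, reload the compiled artifact in every process.
aot = total_time(TASKS, COMPILED_TASK_S, COMPILE_S, False)

print(f"JIT without cache reuse: {jit_no_reuse:.0f}s")  # 6600s
print(f"AoT, compiled once:      {aot:.0f}s")            # 660s
```

Partial cache reuse would place torch.compile somewhere between these two totals.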

Pricing or License

Not specified in the source.

More resources

CUDA Toolkit 13.0 for Jetson Thor: Unified Arm Ecosystem and More

Unified CUDA toolkit for Arm on Jetson Thor with full memory coherence, multi-process GPU sharing, OpenRM/dmabuf interoperability, NUMA support, and better tooling across embedded and server-class targets.

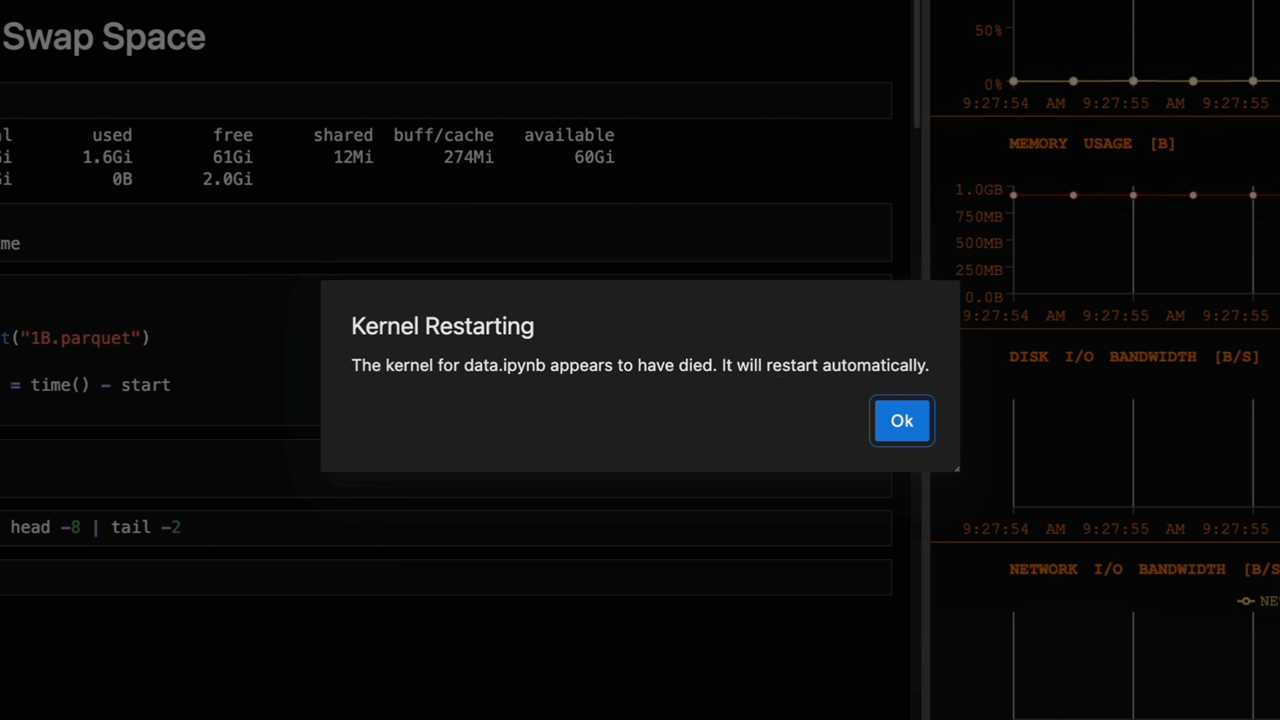

Cut Model Deployment Costs While Keeping Performance With GPU Memory Swap

Leverage GPU memory swap (model hot-swapping) to share GPUs across multiple LLMs, reduce idle GPU costs, and improve autoscaling while meeting SLAs.

Improving GEMM Kernel Auto-Tuning Efficiency with nvMatmulHeuristics in CUTLASS 4.2

Introduces nvMatmulHeuristics to quickly select a small set of high-potential GEMM kernel configurations for CUTLASS 4.2, drastically reducing auto-tuning time while approaching exhaustive-search performance.

Fine-Tuning gpt-oss for Accuracy and Performance with Quantization Aware Training

Guide to fine-tuning gpt-oss with SFT + QAT to recover FP4 accuracy while preserving efficiency, including upcasting to BF16, MXFP4, NVFP4, and deployment with TensorRT-LLM.

How to spot and fix 5 pandas bottlenecks with cudf.pandas (GPU acceleration)

A developer-focused resource outlining five common pandas bottlenecks, practical CPU and GPU fixes, and drop-in GPU acceleration with cudf.pandas for scalable data workflows.

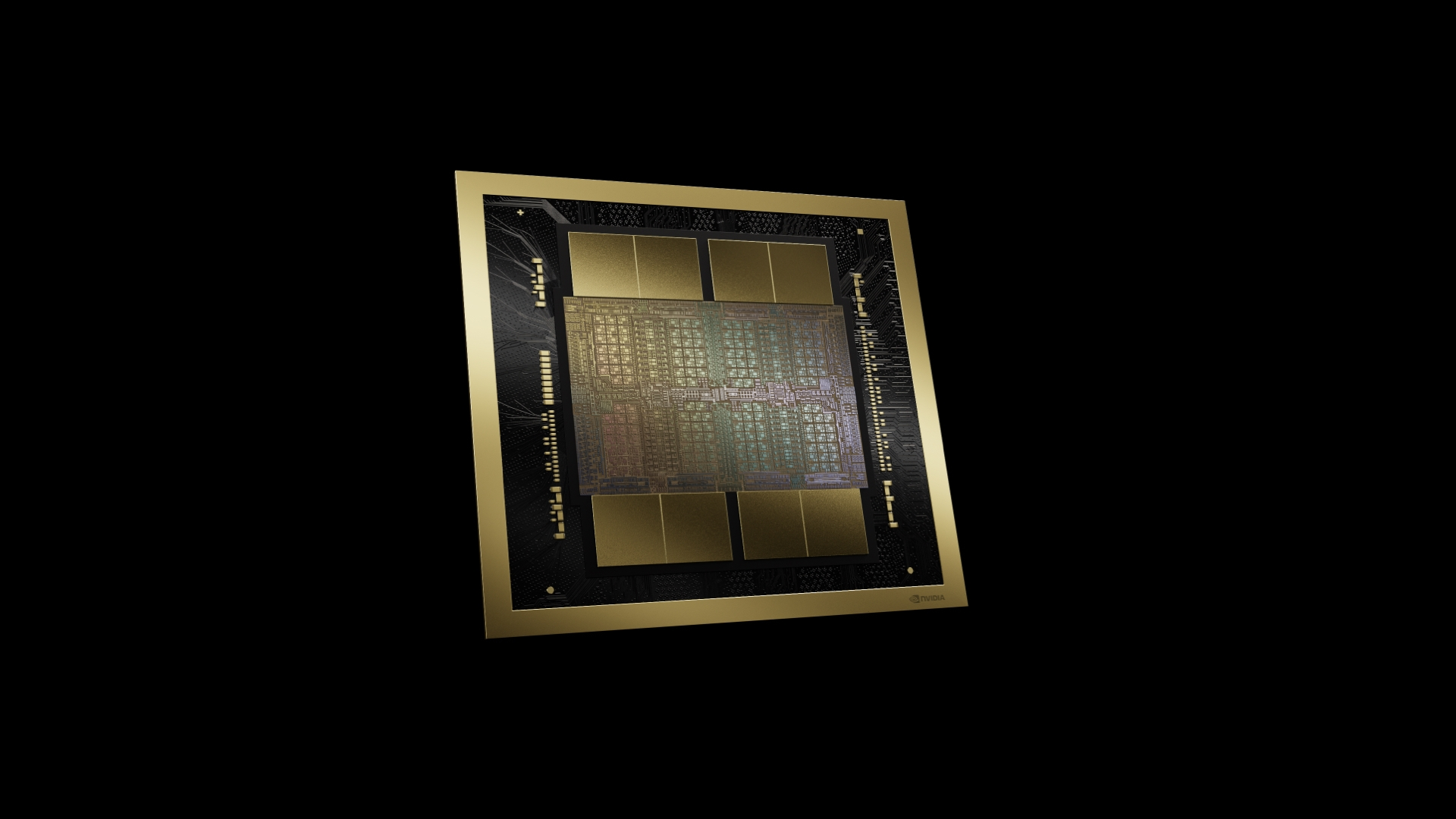

Inside NVIDIA Blackwell Ultra: The Chip Powering the AI Factory Era

An in‑depth profile of NVIDIA Blackwell Ultra, its dual‑die NV‑HBI design, NVFP4 precision, 288 GB HBM3e per GPU, and system‑level interconnects powering AI factories and large‑scale inference.