Inside NVIDIA Blackwell Ultra: The Chip Powering the AI Factory Era

Sources: https://developer.nvidia.com/blog/inside-nvidia-blackwell-ultra-the-chip-powering-the-ai-factory-era/, NVIDIA Dev Blog

Overview

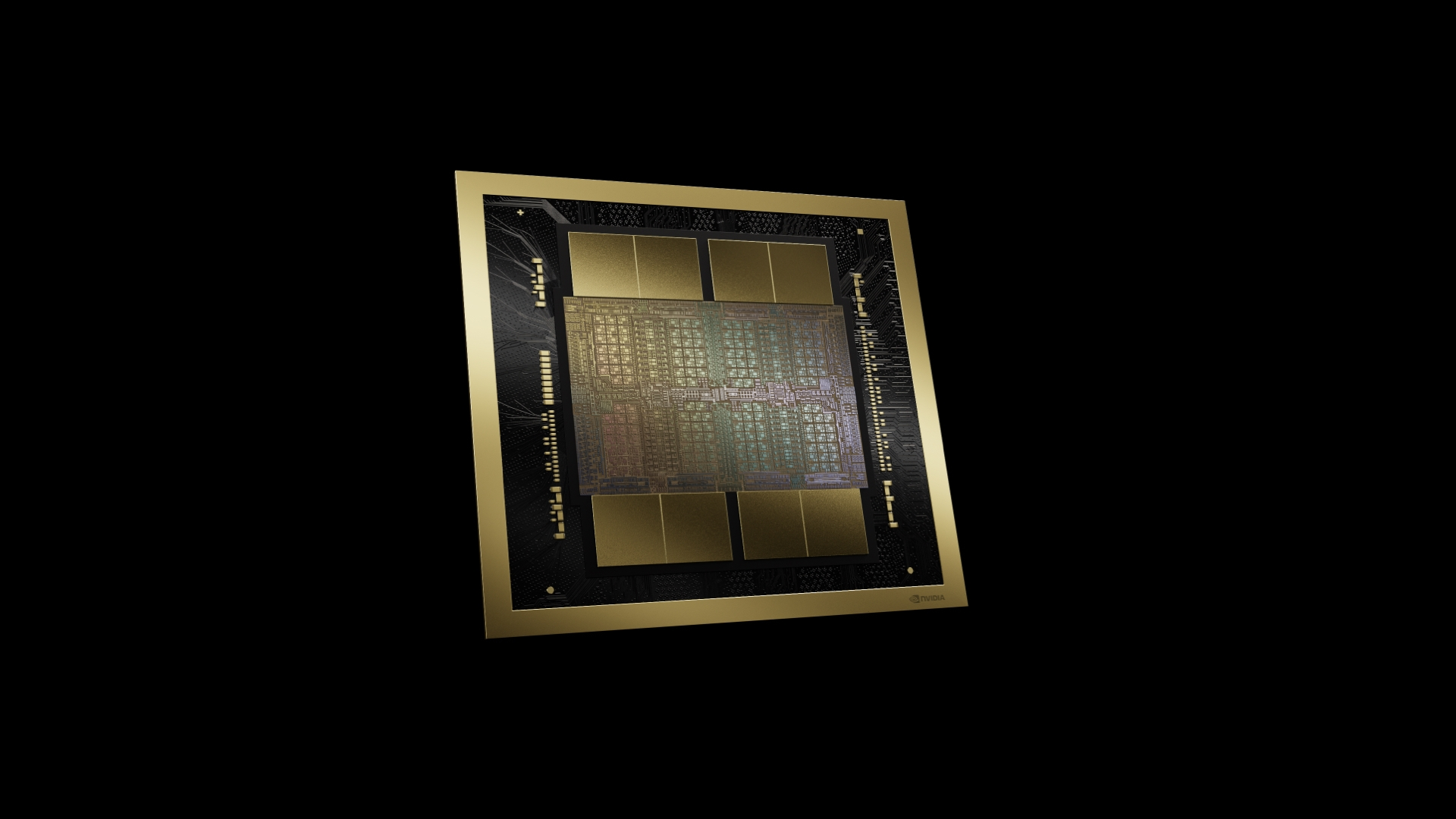

NVIDIA Blackwell Ultra is the latest member of the Blackwell architecture family, designed to accelerate training and AI reasoning at scale. It combines silicon innovations with higher levels of system integration to deliver performance, scalability, and efficiency for AI factories and the real-time AI services they power. Its key elements are a dual-reticle design, a high-bandwidth, large-capacity HBM3e memory subsystem, fifth-generation Tensor Cores, and the new NVFP4 4-bit precision format, all with a strong focus on energy efficiency. The result is an accelerator that preserves the familiar CUDA programming model while delivering measurable gains for dense and sparse AI workloads.
Blackwell Ultra is built from two reticle-sized dies connected by the NVIDIA High-Bandwidth Interface (NV-HBI), a custom, power-efficient die-to-die interconnect that provides 10 TB/s of bandwidth. Manufactured on the TSMC 4NP process, it contains 208 billion transistors (about 2.6× as many as the NVIDIA Hopper GPU), yet it operates as a single CUDA-programmed accelerator. The full implementation has 160 Streaming Multiprocessors (SMs) organized into eight Graphics Processing Clusters (GPCs). Each SM is a self-contained compute engine with four fifth-generation Tensor Cores, for a total of 640 Tensor Cores on the full chip, all upgraded to handle NVFP4. Each SM also has 256 KB of Tensor Memory (TMEM), which keeps operands close to the compute units and reduces memory traffic. The platform also supports dual-thread-block MMA, in which paired SMs cooperate on a single MMA operation and share operands, improving data locality and memory efficiency.
NVFP4 is NVIDIA's 4-bit floating-point format. It uses two levels of scaling: an FP8 (E4M3) scale for each micro-block of 16 values and an FP32 scale for the whole tensor. This enables hardware-accelerated quantization with markedly lower quantization error than conventional FP4.
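The two-level scaling can be illustrated with a small pure-Python simulation. This is a minimal sketch, not NVIDIA's implementation: it assumes the 4-bit element type is E2M1 (magnitudes 0 to 6), stores one FP8 E4M3 scale per 16-value block, and picks the per-tensor FP32 scale as amax / (6 × 448) so that every block scale fits in E4M3 range, which is one common recipe rather than something the article specifies. Rounding is simplified (ties do not follow bit-exact hardware rounding), and all helper names are hypothetical.

```python
import math
import random

# Magnitudes representable by an FP4 E2M1 element (sign is handled separately).
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = 6.0     # largest E2M1 magnitude
E4M3_MAX = 448.0  # largest finite FP8 E4M3 value
BLOCK = 16        # values sharing one FP8 block scale


def round_to_e4m3(x: float) -> float:
    """Round a non-negative float to the nearest FP8 E4M3 value (3 mantissa bits).

    Values are clamped to E4M3_MAX; exponents below -6 fall into the subnormal
    spacing of 2**-9. Ties use Python's round(), not bit-exact hardware rounding.
    """
    if x <= 0.0:
        return 0.0
    x = min(x, E4M3_MAX)
    e = max(math.floor(math.log2(x)), -6)
    step = 2.0 ** (e - 3)  # 8 representable steps per power-of-two interval
    return min(round(x / step) * step, E4M3_MAX)


def quantize_fp4(v: float) -> float:
    """Snap a value (already divided by its scales) to the nearest signed E2M1 value."""
    mag = min(E2M1_GRID, key=lambda g: abs(abs(v) - g))
    return math.copysign(mag, v)


def nvfp4_quantize(x: list[float]) -> tuple[float, list[tuple[float, list[float]]]]:
    """Return (tensor_scale, [(block_scale, fp4_values), ...])."""
    amax = max((abs(v) for v in x), default=0.0)
    # Assumed recipe: FP32 tensor scale chosen so the largest block scale is E4M3_MAX.
    tensor_scale = amax / (FP4_MAX * E4M3_MAX) if amax > 0.0 else 1.0
    blocks = []
    for i in range(0, len(x), BLOCK):
        block = x[i:i + BLOCK]
        bmax = max(abs(v) for v in block)
        # Per-block scale maps the block's largest magnitude onto FP4_MAX, stored as E4M3.
        scale = round_to_e4m3(bmax / FP4_MAX / tensor_scale)
        if scale == 0.0:
            codes = [0.0] * len(block)
        else:
            codes = [quantize_fp4(v / (scale * tensor_scale)) for v in block]
        blocks.append((scale, codes))
    return tensor_scale, blocks


def nvfp4_dequantize(tensor_scale: float, blocks: list[tuple[float, list[float]]]) -> list[float]:
    """Multiply each FP4 value by its block scale and the tensor scale."""
    return [c * scale * tensor_scale for scale, codes in blocks for c in codes]


def bits_per_value(n: int) -> float:
    """Storage cost: 4 bits per value, 8 bits per block scale, 32 bits per tensor."""
    return (4 * n + 8 * math.ceil(n / BLOCK) + 32) / n


if __name__ == "__main__":
    random.seed(0)
    x = [random.gauss(0.0, 1.0) for _ in range(64)]
    ts, blocks = nvfp4_quantize(x)
    y = nvfp4_dequantize(ts, blocks)
    print(f"max abs error: {max(abs(a - b) for a, b in zip(x, y)):.4f}")
    print(f"bits/value (64 values): {bits_per_value(64):.2f}")         # 5.00
    print(f"bits/value (1M values): {bits_per_value(1_000_000):.4f}")  # 4.5000
```

Because each 16-value block carries one extra 8-bit scale, the storage cost approaches 4 + 8/16 = 4.5 bits per value for large tensors; the per-tensor FP32 scale only adds noticeable overhead for tiny tensors such as the 64-element example.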
In practice, NVFP4 can deliver accuracy close to FP8 in many AI workloads while reducing memory footprint by about 1.8× compared with FP8 and up to about 3.5× compared with FP16. NVFP4 compute on Blackwell Ultra provides a substantial uplift in throughput and efficiency, especially for low-precision inference and large-batch pretraining: the Ultra variant raises NVFP4 performance to about 15 petaFLOPS, up from roughly 10 petaFLOPS for the base Blackwell design. Because the architecture remains CUDA-friendly, developers can tap these compute and memory gains through the existing CUDA ecosystem.
Blackwell Ultra includes 288 GB of HBM3e memory per GPU, 3.6× the on-package memory of H100 and about 50% more than Blackwell. This capacity is designed to host trillion-parameter models, extend context length, and enable high-throughput concurrent inference without extensive KV-cache offloading.
Beyond raw compute, the architecture introduces specialized engines for modern AI workloads that process multimodal data. A second-generation Transformer Engine and fifth-generation Tensor Cores deliver higher throughput and lower latency for dense and sparse workloads. Attention processing, a critical component of modern transformers, benefits from doubled Special Function Unit (SFU) throughput for key attention instructions, enabling up to 2× faster attention-layer compute compared with Blackwell. Combined with NVFP4 precision and the larger memory capacity, this yields step-function gains for long context windows and reasoning models: faster inference with longer context, more concurrent model instances, and lower latency for large-scale tasks.
For connectivity, Blackwell Ultra supports fifth-generation NVIDIA NVLink for GPU-to-GPU communication over NVLink Switch, NVLink-C2C for a coherent interconnect to an NVIDIA Grace CPU, and a PCIe Gen 6 x16 interface for host connectivity.
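The footprint ratios and the 288 GB capacity figure can be related with simple arithmetic. The sketch below is an illustration under stated assumptions: it counts NVFP4 as 4.5 bits per weight (a 4-bit value plus one 8-bit scale per 16 values, ignoring the per-tensor FP32 scale), reads 288 GB as 288 × 10^9 bytes, uses a hypothetical 1-trillion-parameter model, and counts weights only, with no KV cache, activations, or runtime overhead. The function names are hypothetical.

```python
import math

HBM_BYTES_PER_GPU = 288e9  # 288 GB per Blackwell Ultra GPU, read as decimal GB (assumption)

# Effective storage bits per weight; NVFP4 = 4-bit value + one 8-bit scale per 16 values.
BITS_PER_WEIGHT = {"FP16": 16.0, "FP8": 8.0, "NVFP4": 4.0 + 8.0 / 16}


def weight_bytes(num_params: float, fmt: str) -> float:
    """Bytes needed to store num_params weights in the given format."""
    return num_params * BITS_PER_WEIGHT[fmt] / 8


def min_gpus_for_weights(num_params: float, fmt: str) -> int:
    """Lower bound on GPUs needed to hold the weights alone (no KV cache or activations)."""
    return math.ceil(weight_bytes(num_params, fmt) / HBM_BYTES_PER_GPU)


if __name__ == "__main__":
    params = 1e12  # hypothetical 1-trillion-parameter model
    for fmt in BITS_PER_WEIGHT:
        gb = weight_bytes(params, fmt) / 1e9
        print(f"{fmt:>5}: {gb:7.1f} GB of weights, at least {min_gpus_for_weights(params, fmt)} GPU(s)")
    nv = BITS_PER_WEIGHT["NVFP4"]
    print(f"FP8 / NVFP4 footprint:  {BITS_PER_WEIGHT['FP8'] / nv:.2f}x")   # 1.78x
    print(f"FP16 / NVFP4 footprint: {BITS_PER_WEIGHT['FP16'] / nv:.2f}x")  # 3.56x
```

Under these assumptions the ratios come out to about 1.78× versus FP8 and 3.56× versus FP16, in line with the approximate 1.8× and 3.5× figures. The weights of the hypothetical 1-trillion-parameter model take 562.5 GB in NVFP4 (versus 1,000 GB in FP8 and 2,000 GB in FP16), so even this lower bound spans two 288 GB GPUs before any KV cache is allocated.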
Together, these interconnects enable scalable, low-latency data movement across the data center, from node to rack scale. Table 2 in NVIDIA's coverage summarizes the generational leap in transistor count, memory capacity, interconnect bandwidth, and precision throughput, illustrating how Blackwell Ultra scales for AI factories at node and rack scale. Together with its interoperability with the CUDA ecosystem, the platform is designed to enable AI applications that were previously computationally prohibitive, from training at scale to real-time inference in production environments.
Beyond the chip-level advances, NVIDIA positions Blackwell Ultra as the backbone of its next-generation AI infrastructure, delivering performance, efficiency, and scale from desktop superchips to full AI factory racks. The combination of dual-die integration, NVFP4 acceleration, large memory capacity, and advanced interconnect technology makes it well suited for large-scale AI deployments, and NVIDIA notes that it is designed to support production AI workloads at unprecedented scale, including workloads measured in trillions of tokens and multimodal workflows.
For developers and system architects who want more detail, NVIDIA provides a technical brief that maps silicon features to system-level outcomes. NVIDIA's in-depth coverage is available here: https://developer.nvidia.com/blog/inside-nvidia-blackwell-ultra-the-chip-powering-the-ai-factory-era/.
Overall, Blackwell Ultra offers a pragmatic, scalable path to AI factory deployments: strong compute, abundant on-package memory, and high-bandwidth interconnects that together lower latency and improve throughput per watt.
CUDA compatibility means existing CUDA-centric workflows can move to the Blackwell Ultra platform while taking advantage of its specialized AI accelerators and memory innovations. For anyone evaluating AI factory infrastructure, Blackwell Ultra represents a holistic approach that aligns silicon advances with system capabilities to reduce total cost of ownership while enabling more ambitious AI workloads at scale. NVIDIA's coverage explores the full silicon-to-system story and its implications for deployments in the trillion-token era; references and deeper materials are available on the NVIDIA Dev Blog linked above.
Notes: The content summarized here derives directly from NVIDIA's description of Blackwell Ultra and its features. The article emphasizes dual-die integration, NVFP4 acceleration, large memory capacity, and interconnect innovations as the enabling factors for AI factories.

More resources

CUDA Toolkit 13.0 for Jetson Thor: Unified Arm Ecosystem and More

Unified CUDA toolkit for Arm on Jetson Thor with full memory coherence, multi-process GPU sharing, OpenRM/dmabuf interoperability, NUMA support, and better tooling across embedded and server-class targets.

Cut Model Deployment Costs While Keeping Performance With GPU Memory Swap

Leverage GPU memory swap (model hot-swapping) to share GPUs across multiple LLMs, reduce idle GPU costs, and improve autoscaling while meeting SLAs.

Improving GEMM Kernel Auto-Tuning Efficiency with nvMatmulHeuristics in CUTLASS 4.2

Introduces nvMatmulHeuristics to quickly select a small set of high-potential GEMM kernel configurations for CUTLASS 4.2, drastically reducing auto-tuning time while approaching exhaustive-search performance.

Make ZeroGPU Spaces faster with PyTorch ahead-of-time (AoT) compilation

Learn how PyTorch AoT compilation speeds up ZeroGPU Spaces by exporting a compiled model once and reloading instantly, with FP8 quantization, dynamic shapes, and careful integration with the Spaces GPU workflow.

Fine-Tuning gpt-oss for Accuracy and Performance with Quantization Aware Training

Guide to fine-tuning gpt-oss with SFT + QAT to recover FP4 accuracy while preserving efficiency, including upcasting to BF16, MXFP4, NVFP4, and deployment with TensorRT-LLM.

How Small Language Models Are Key to Scalable Agentic AI

Explores how small language models enable cost-effective, flexible agentic AI alongside LLMs, with NVIDIA NeMo and Nemotron Nano 2.