Car-GPT: Could LLMs finally make self-driving cars happen?

Source: https://thegradient.pub/car-gpt/ (The Gradient)

Overview

Autonomous driving has long toggled between a modular software stack (Perception, Localization, Planning, Control) and the allure of end-to-end learning. The Gradient article Car-GPT surveys how large language models (LLMs) might help bridge perception, planning, and generation by interpreting and acting on prompts that describe scenes, intentions, or future scenarios. The piece is careful to frame this as early research: there is potential, but it is not yet proven in live driving.

The author opens with an analogy to the accidental discovery of penicillin to illustrate how surprising, useful insights can emerge from data-driven systems when traditional, well-trodden methods fail. In autonomous driving, a similar pivot could come from LLMs that reason about perception outputs, query an image or video scene, and propose or critique driving actions.

The article notes that the first wave of papers mentioning LLMs in self-driving cars appeared around mid-2023, and it surveys a broad landscape of approaches, from perception enhancements to planning and generation. Three active research directions are highlighted: (1) Perception, where the input is images (or BEV views) and the output includes detections, lanes, and other scene annotations; (2) Planning, where the system suggests or optimizes a path from A to B; and (3) Generation, where language-augmented models create descriptions, predictions, or even future scenes to improve data collection and planning. In this framing, LLMs do not replace all automotive software; instead, they augment existing modules with language-based reasoning, task-specific prompts, and careful data-driven inference.

The article describes several families of models and representative works that illustrate where LLMs can contribute:

- Detection, Prediction, and Tracking in Perception with models like HiLM-D and MTD-GPT;

- BEV (Bird's-Eye View) perception and prompt-driven analysis via systems such as Talk2BEV, which combines BEV inputs with LLM-driven reasoning;

- The use of prompts to guide a perception output (for example, prompting an LLM to crop regions or focus attention and then predict a path);

- Planning approaches that produce driving trajectories by leveraging language-enabled reasoning; and

- Generation approaches that blur the line between perception and scene synthesis, including models that generate videos from text and images (GAIA-1 by Wayve) or generate scenes from driving data (MagicDrive, Driving Into the Future, Driving Diffusion).

The article emphasizes three core perception tasks where LLMs can be applied: Detection (what is in the scene), Prediction (how objects will move), and Tracking (maintaining consistent IDs across time). It then describes how these outputs can be enhanced or reinterpreted by an LLM to produce actionable driving decisions or richer scene understanding.

The author raises practical concerns: the risk of hallucinations, the black-box nature of large models, and the challenge of making such systems reliable enough for live driving. While some researchers argue for a Grok-like model on a vehicle that can answer questions about the current scene, the piece stresses that it is still very early days. Acknowledging both promise and risk, the article suggests that researchers build a careful, evidence-driven experimental path and follow the evolving literature as the field matures.

Overall, Car-GPT frames LLMs as a potential component in a multi-modal, human-in-the-loop, data-driven autonomous driving stack. They are most plausibly a set of complementary capabilities that enhance perception, planning, and generation rather than a drop-in replacement for existing systems.
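To make the tracking task concrete, here is the kind of classical baseline that an LLM would augment rather than replace: constant-velocity prediction plus greedy nearest-neighbour ID association in BEV coordinates. This is a minimal sketch for reference only and is not taken from the article, HiLM-D, MTD-GPT, or PromptTrack; the `Track` class, the `associate` helper, and the 2 m gating distance are hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    track_id: int
    x: float          # BEV position in metres (hypothetical ego-centred frame)
    y: float
    vx: float = 0.0   # velocity in m/s, estimated from the last two matched positions
    vy: float = 0.0

    def predict(self, dt: float) -> tuple[float, float]:
        """Constant-velocity prediction of this object's position after dt seconds."""
        return self.x + self.vx * dt, self.y + self.vy * dt


def associate(tracks: list[Track], detections: list[tuple[float, float]],
              dt: float, max_dist: float, next_id: int) -> int:
    """Greedily match detections to predicted track positions (tracks updated in place).

    Unmatched detections start new tracks; returns the next unused track ID.
    """
    unmatched = list(range(len(detections)))
    for track in tracks:
        px, py = track.predict(dt)
        best, best_d = None, max_dist
        for i in unmatched:
            d = math.dist((px, py), detections[i])
            if d < best_d:
                best, best_d = i, d
        if best is not None:
            nx, ny = detections[best]
            track.vx, track.vy = (nx - track.x) / dt, (ny - track.y) / dt
            track.x, track.y = nx, ny
            unmatched.remove(best)
    for i in unmatched:
        tracks.append(Track(next_id, *detections[i]))
        next_id += 1
    return next_id


tracks: list[Track] = []
next_id = associate(tracks, [(0.0, 0.0), (10.0, 5.0)], dt=0.1, max_dist=2.0, next_id=1)
# Next frame: the detector returns the same two objects in the opposite order.
next_id = associate(tracks, [(10.2, 5.0), (0.5, 0.0)], dt=0.1, max_dist=2.0, next_id=next_id)
print([(t.track_id, t.x, t.y) for t in tracks])  # [(1, 0.5, 0.0), (2, 10.2, 5.0)]
```

Swapping the detection order between frames does not change the IDs, which is the property tracking is meant to preserve. Many practical trackers (SORT, for example) use a Kalman filter and optimal Hungarian assignment instead of this greedy loop.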

Key features

- Three usage families for LLMs in self-driving: Perception, Planning, Generation. Perception includes detecting and describing objects, lanes, and scene semantics; Planning uses language‑informed reasoning to propose or refine trajectories; Generation produces descriptions or simulations of driving scenarios.

- BEV and multi-view inputs: several approaches emphasize Bird's-Eye View representations and integrate multi-view imagery with LLM reasoning to answer prompts such as “find the vehicles that are turning right.”

- Language-augmented perception: LLMs add a prompt-driven layer that enhances traditional perception outputs with natural language explanations, questions, or follow-up analyses.

- Prompts as actionable levers: prompts guide where to crop or examine regions and how to interpret detections to drive planning decisions.

- 4D perception and IDs: systems such as PromptTrack seek to assign unique IDs to objects across frames, enabling consistent tracking in a language‑assisted pipeline.

- Planning via language models: examples include Talk2BEV, which uses language-enhanced BEV maps to inform planning decisions, potentially in conjunction with models like LLaVA or GPT‑class systems.

- Generation and world models: GAIA‑1 demonstrates a pipeline where text and images drive a world model that can produce video; other projects (MagicDrive, Driving Into the Future, Driving Diffusion) generate future scenarios from current data.

- Not a slam-dunk replacement: the article emphasizes that LLMs’ strength lies in flexible reasoning and prompting, not in replacing deterministic, modular control pipelines—trust, accountability, and real‑world reliability remain critical concerns.

- Early-stage and evolving: the first wave of related papers appeared around mid-2023; the community is still probing how, when, and where LLMs provide value in driving, including questions about latency, safety, and verification.

| Area | What LLMs add | Evidence from article |
|---|---|---|
| Perception | Detection, association across frames, language-guided description | Mentions HiLM-D, MTD-GPT, and PromptTrack concepts |
| Planning | Language-augmented BEV maps, region-focused prompts, trajectory suggestion | Talk2BEV and related planning work |
| Generation | Scene/video generation from perception or prompts | GAIA-1, MagicDrive, Driving Into the Future, Driving Diffusion |
| Trust & verification | Attempts to improve transparency; raises questions about determinism | Discussion of “black box” concerns and live-use challenges |
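Talk2BEV-style systems pair a BEV map with per-object language descriptions before querying an LLM. A minimal sketch of that idea follows: it serializes a small object list and a question such as “find the vehicles that are turning right” into one prompt. The `BEVObject` schema, its coordinate convention, and the prompt wording are hypothetical; this is not Talk2BEV's actual format, and no model is called.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class BEVObject:
    obj_id: int
    category: str
    x: float       # metres ahead of the ego vehicle (hypothetical convention)
    y: float       # metres to the left of the ego vehicle
    caption: str   # free-text description, e.g. produced by a vision-language model


def build_bev_prompt(objects: list[BEVObject], question: str) -> str:
    """Serialize a language-enhanced BEV object list plus a user question into one prompt."""
    scene = json.dumps([asdict(o) for o in objects], indent=2)
    return (
        "You are given a bird's-eye-view scene as a JSON list of objects.\n"
        "Coordinates are in metres: x forward, y to the left of the ego vehicle.\n"
        f"Scene:\n{scene}\n"
        "Answer using only object ids that appear in the scene.\n"
        f"Question: {question}"
    )


objects = [
    BEVObject(1, "car", 12.0, -3.5, "white sedan with right turn signal on"),
    BEVObject(2, "pedestrian", 8.0, 4.0, "person waiting at the crosswalk"),
]
prompt = build_bev_prompt(objects, "Find the vehicles that are turning right.")
print(prompt)
```

Asking the model to cite object ids from the serialized scene makes its answer checkable against the perception output, which matters given the hallucination risk the article raises.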

Common use cases

- Perception enhancement: using LLMs to interpret perception outputs, annotate scenes, and describe objects, lanes, and spatial relations; enabling a richer human‑machine interface.

- Planning augmentation: leveraging prompts to guide planning decisions, focus attention on specific regions (for example, by cropping), and co-create driving trajectories with the base perception model.

- Generation and data synthesis: producing new driving scenarios or future scenes to augment training data and test planning under diverse conditions; examples include GAIA‑1 generating videos from text and images, and MagicDrive generating scenes conditioned on perception outputs.

- Q&A and troubleshooting: envisioning a Grok-like Q&A role on vehicles (e.g., answering questions about the current scene) as a potential use, though live deployment remains unproven.

- Data loop for training: using language-enabled pipelines to create or annotate data, potentially improving perception, tracking consistency, and planning plausibility through iterative feedback.
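For the perception and Q&A use cases above, a cheap guard against hallucination is to check that every object an LLM cites actually exists in the perception output. A minimal sketch follows, assuming (hypothetically) that the model was instructed to reply with JSON such as `{"object_ids": [1, 3]}`; the `check_cited_ids` helper is illustrative and not from the article.

```python
import json


def check_cited_ids(llm_answer: str, known_ids: set[int]) -> tuple[list[int], list[int]]:
    """Split object ids cited by an LLM into (valid, hallucinated) using the detected ids.

    Assumes a hypothetical reply format: {"object_ids": [<int>, ...]}.
    Unparseable or malformed replies yield ([], []), i.e. nothing usable.
    """
    try:
        payload = json.loads(llm_answer)
    except json.JSONDecodeError:
        return [], []
    cited = payload.get("object_ids", []) if isinstance(payload, dict) else []
    if not isinstance(cited, list):
        return [], []
    ids = [i for i in cited if isinstance(i, int)]
    valid = [i for i in ids if i in known_ids]
    hallucinated = [i for i in ids if i not in known_ids]
    return valid, hallucinated


# Perception detected objects 1 and 2; the model claims objects 1 and 7 are turning right.
valid, hallucinated = check_cited_ids('{"object_ids": [1, 7]}', known_ids={1, 2})
print(valid, hallucinated)  # [1] [7]
```

Object 7 never appeared in the detections, so a downstream planner would discard it (or flag the whole answer) rather than act on it.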

Setup & installation

Not specified in the source article. The content is conceptual and surveys a research landscape rather than providing installation or integration instructions.

Quick start

The article provides conceptual uses rather than a turnkey implementation. A minimal, illustrative workflow inspired by the text could be:

```python
# Conceptual quick start inspired by the article (illustrative, not code from the source)
import json

# Given a BEV map and object detections from a perception module
perception_output = {
    "bev": "...BEV representation...",  # placeholder for a real BEV map
    "objects": [
        {"id": 1, "type": "vehicle", "direction": "left", "speed": 25},
        # ... further detections
    ],
}

llm_prompt = (
    "Given the BEV map and detected objects, identify hazards and propose a safe driving action. "
    "If useful, crop the front-left region and re-evaluate."
)


def llm_run(model: str, prompt: str, inputs: dict) -> str:
    """Hypothetical LLM runner: replace the body with a call to your actual LLM API."""
    # This stub only echoes the request so the example runs without network access.
    return f"[{model}] {prompt}\nInputs: {json.dumps(inputs)}"


llm_response = llm_run(model="gpt-4", prompt=llm_prompt, inputs=perception_output)
print(llm_response)
```

This mirrors the article's idea that prompts can guide perception outputs toward planning decisions, e.g., asking the model to find vehicles turning right or to focus on particular regions.

Quick start (workflow outline)

- Step 1: Run a perception module to produce a BEV map and detections.

- Step 2: Prompt an LLM to interpret the outputs and suggest safe actions, optionally cropping regions for closer inspection.

- Step 3: Use the LLM’s output to inform a planning module or to generate a narrative explanation of the scene.
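The three steps can be sketched as a small decision function in which the LLM only advises and a deterministic rule keeps the final say. Everything here is a hypothetical illustration, not the article's method: the action vocabulary, the 10 m pedestrian rule, and the hand-written scenes that stand in for a real perception module (Step 1).

```python
from typing import Callable

# Hypothetical discrete action vocabulary that the downstream planner understands.
ALLOWED_ACTIONS = {"keep_lane", "slow_down", "stop", "change_lane_left", "change_lane_right"}


def rule_based_planner(scene: dict) -> str:
    """Deterministic baseline: stop if any pedestrian is closer than 10 m, else keep the lane."""
    close = any(o["type"] == "pedestrian" and o["distance_m"] < 10.0 for o in scene["objects"])
    return "stop" if close else "keep_lane"


def plan(scene: dict, ask_llm: Callable[[dict], str]) -> str:
    """Combine a perception scene (Step 1) with an LLM suggestion (Step 2) into one action (Step 3)."""
    baseline = rule_based_planner(scene)
    if baseline == "stop":
        return baseline  # the hard safety rule always overrides the LLM
    suggestion = ask_llm(scene).strip().lower()
    # Accept the LLM's suggestion only if it is a known action; otherwise use the baseline.
    return suggestion if suggestion in ALLOWED_ACTIONS else baseline


# Step 1 stand-ins: hand-written scenes instead of a real perception module.
far = {"objects": [{"id": 1, "type": "pedestrian", "distance_m": 25.0}]}
near = {"objects": [{"id": 1, "type": "pedestrian", "distance_m": 6.0}]}

print(plan(far, lambda s: "Slow_Down"))    # slow_down
print(plan(far, lambda s: "fly over it"))  # keep_lane (invalid suggestion, baseline used)
print(plan(near, lambda s: "keep_lane"))   # stop (safety rule wins)
```

The design choice is to treat the LLM's output as a suggestion drawn from a closed vocabulary, so that malformed or unsafe text can never reach the controller directly.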

Pros and cons

- Pros
  - Flexibility: LLMs offer language-based reasoning that can interpret and augment perception outputs, potentially facilitating richer human-machine interaction.
  - Prompt-driven control: Prompts enable dynamic adjustment of attention, focus areas, and analysis without retraining models.
  - Data generation and simulation: Generation models can create new scenarios for training and testing planning components.
  - Interpretability in practice: While not a silver bullet, language-based reasoning can provide a traceable narrative around decisions.

- Cons
  - Hallucinations and reliability: The article highlights that LLMs can produce absurd or erroneous outputs and that trust is still a major open question.
  - Black box and determinism: There are concerns about determinism and debugging in a live vehicle environment.
  - Live deployment challenges: Research is still in its first wave, with limited evidence of online, fielded use.
  - Latency and safety: Real-time driving requires low latency and robust safety guarantees, which are not yet established for LLM-augmented stacks.
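The latency concern can be made concrete: a real-time control loop cannot wait indefinitely for a model reply, so one common pattern is to bound the wait and fall back to a deterministic default. The sketch below assumes a hypothetical `ask_llm` callable and a 200 ms budget picked only for illustration; `fast_llm` and `slow_llm` are stand-ins, not real models.

```python
import concurrent.futures
import time
from typing import Callable

# Shared worker pool so a slow model call does not block the caller past its deadline.
_EXECUTOR = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def advise_with_deadline(ask_llm: Callable[[dict], str], scene: dict,
                         deadline_s: float, fallback: str) -> str:
    """Return the LLM's advice if it arrives within deadline_s seconds, else the fallback action."""
    future = _EXECUTOR.submit(ask_llm, scene)
    try:
        return future.result(timeout=deadline_s)
    except concurrent.futures.TimeoutError:
        # Stop waiting; an already-running call cannot be interrupted and finishes in the background.
        future.cancel()
        return fallback


def fast_llm(scene: dict) -> str:
    return "slow_down"


def slow_llm(scene: dict) -> str:
    time.sleep(0.5)  # simulates a model call that misses the deadline
    return "change_lane_left"


print(advise_with_deadline(fast_llm, {}, deadline_s=0.2, fallback="keep_lane"))  # slow_down
print(advise_with_deadline(slow_llm, {}, deadline_s=0.2, fallback="keep_lane"))  # keep_lane
```

The timeout bounds how long the caller waits, not how much compute the model uses; the slow call still occupies a worker thread until it returns.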

Alternatives

The landscape contrasts modular pipelines with end-to-end learning. The contrasts the article draws can be summarized as follows:

| Approach | Strengths | Weaknesses |
|---|---|---|
| Modular (perception + planning + control) | Clear separation of responsibilities; testability; easier debugging | Requires reliable integration between modules; potential latency from handoffs |
| End-to-end | Simpler pipeline; potential performance gains from joint optimization | Black-box behavior; difficult to interpret or verify; safety concerns |
| LLM-augmented (perception/planning/generation) | Adds reasoning, prompts, and data-driven flexibility; potential for improved human interaction | Early stage; trust and safety questions; live deployment unproven |

Pricing or License

Not specified in the source. No pricing or licensing details are provided for the concepts discussed.

References

More resources

CUDA Toolkit 13.0 for Jetson Thor: Unified Arm Ecosystem and More

Unified CUDA toolkit for Arm on Jetson Thor with full memory coherence, multi-process GPU sharing, OpenRM/dmabuf interoperability, NUMA support, and better tooling across embedded and server-class targets.

Cut Model Deployment Costs While Keeping Performance With GPU Memory Swap

Leverage GPU memory swap (model hot-swapping) to share GPUs across multiple LLMs, reduce idle GPU costs, and improve autoscaling while meeting SLAs.

Fine-Tuning gpt-oss for Accuracy and Performance with Quantization Aware Training

Guide to fine-tuning gpt-oss with SFT + QAT to recover FP4 accuracy while preserving efficiency, including upcasting to BF16, MXFP4, NVFP4, and deployment with TensorRT-LLM.

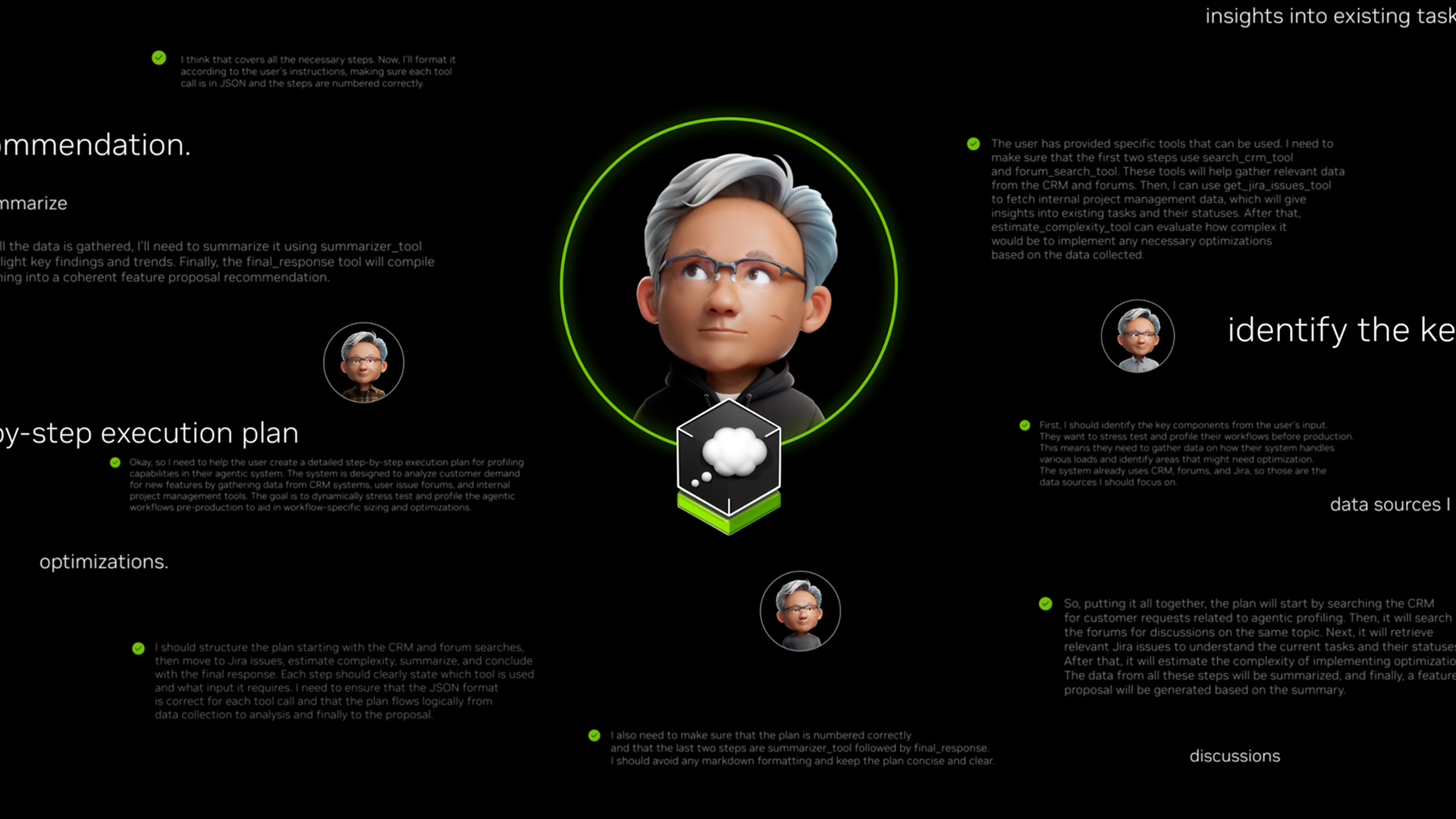

How Small Language Models Are Key to Scalable Agentic AI

Explores how small language models enable cost-effective, flexible agentic AI alongside LLMs, with NVIDIA NeMo and Nemotron Nano 2.

Getting Started with NVIDIA Isaac for Healthcare Using the Telesurgery Workflow

A production-ready, modular telesurgery workflow from NVIDIA Isaac for Healthcare unifies simulation and clinical deployment across a low-latency, three-computer architecture. It covers video/sensor streaming, robot control, haptics, and simulation to support training and remote procedures.

How to Scale Your LangGraph Agents in Production From a Single User to 1,000 Coworkers

Guidance on deploying and scaling LangGraph-based agents in production using the NeMo Agent Toolkit, load testing, and phased rollout for hundreds to thousands of users.