Meet Boti: AI assistant transforming how Buenos Aires residents access government information with Amazon Bedrock

Sources: https://aws.amazon.com/blogs/machine-learning/meet-boti-the-ai-assistant-transforming-how-the-citizens-of-buenos-aires-access-government-information-with-amazon-bedrock, https://aws.amazon.com/blogs/machine-learning/meet-boti-the-ai-assistant-transforming-how-the-citizens-of-buenos-aires-access-government-information-with-amazon-bedrock/, AWS ML Blog

TL;DR

- The Government of the City of Buenos Aires partnered with AWS GenAIIC to build an agentic AI assistant using LangGraph and Amazon Bedrock that answers questions about government procedures.

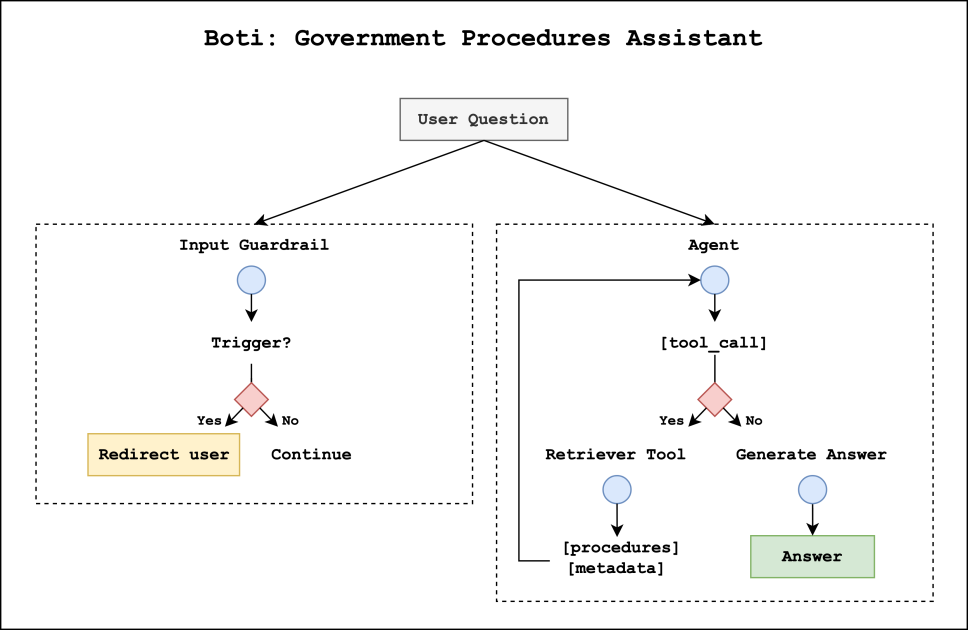

- The solution features a dual design: an input guardrail system that classifies user queries and a government procedures agent that retrieves and composes responses.

- A novel reasoning retrieval flow uses comparative summaries to disambiguate similar procedures, yielding up to 98.9% top-1 retrieval accuracy and a 12.5–17.5% uplift over standard retrieval-augmented generation (RAG) methods.

- Guardrails blocked 100% of harmful queries in evaluation; subject-matter experts reported 98% voseo accuracy and 92% periphrastic future accuracy.

- The project scales Boti’s reach beyond the 3 million monthly conversations it already handles via WhatsApp and links citizens to one of over 1,300 government procedures.

Context and background

The Government of the City of Buenos Aires has a long history of using AI assistants to assist residents. Since February 2019, Boti has enabled citizens to access a wide range of city information via WhatsApp, including renewals for driver’s licenses, healthcare services, and cultural events. This channel has become a preferred conduit for public information, currently facilitating more than 3 million conversations each month. As Boti’s usage grows, the city sought to enhance the conversational experience by leveraging the latest developments in generative AI to answer questions about government procedures directly and guide users to the correct procedure. To pilot a more capable assistant, the city partnered with the Amazon Web Services Generative AI Innovation Center (GenAIIC). Together, the teams built an agentic AI system that combines LangGraph and Amazon Bedrock. The implementation centers on two primary components: an input guardrail system and a government procedures agent. The guardrail relies on a custom LLM classifier to assess incoming queries and determine whether to approve or block requests. Approved requests proceed to the government procedures agent, which retrieves relevant procedural information and crafts responses in Boti’s distinctive voice—short, helpful, and expressive, in Rioplatense Spanish. The Government of the City of Buenos Aires maintains more than 1,300 government procedures, each with its own logic, nuances, and exceptions. The complexity of this landscape motivated the project’s focus on disambiguation and precise retrieval to avoid incorrect responses. For the knowledge base and inference, the teams use Amazon Bedrock Knowledge Bases and the Bedrock Converse API to run LLMs. The solution was evaluated for safety, usefulness, and linguistic adequacy in Rioplatense Spanish, emphasizing voseo (vos instead of tú) and the periphrastic future (ir a before the verb).

What’s new

The new agentic AI system comprises two main components that work in parallel at question time. First, the input guardrail system analyzes each user query to decide whether it is appropriate for on-topic processing. If the query is deemed harmful, the graph execution is stopped and the user is redirected to ask questions about government procedures in a safe way. If approved, the government procedures agent takes over to generate a response. Second, the government procedures agent can call a retrieval tool to obtain relevant context and metadata from government procedures stored in Amazon Bedrock Knowledge Bases, enabling precise, up-to-date answers. The system uses the Amazon Bedrock Converse API for LLM inference, taking advantage of a broad set of LLMs to optimize performance and latency across different subtasks. A key innovation is the reasoning retrieval approach designed to disambiguate closely related procedures. The process begins with the creation of a government procedures knowledge base built from base procedure information (purpose, audience, costs, steps, requirements) and a set of comparative summaries that describe what distinguishes each procedure from its neighbors. These summaries are clustered into small groups (average cluster size ~5) and enriched by an LLM-generated description of the differences. The result is a final, discrimination-rich summary appended to the base information. This approach shares similarities with Anthropic’s Contextual Retrieval, which prepends explanatory context to document chunks. With the knowledge base in place, the reasoning retriever operates in three steps: it receives the retrieved full-text procedures, follows a chain-of-thought style process to determine relevant content and URL attributions, and then uses the chosen context to generate the final answer. In evaluations against a synthetic dataset of 1,908 questions drawn from known procedures, the reasoning retriever markedly outperformed standard RAG methods in top-k retrieval accuracy. The evaluation outcome showed that Cohere Multilingual embeddings, combined with a reasoning step using Claude/Haiku models on Bedrock, yielded particularly strong results across multiple top-k metrics. Additionally, the system’s retrieval configurations were validated against a large-scale benchmark. The first three approaches—Section Titan, Summaries Titan, and Summaries Cohere—represent standard vector-based retrieval. The authors observed that embedding procedure summaries (Summaries Titan and Summaries Cohere) improved retrieval accuracy by 7.8–15.8% over section-based chunking. The Cohere Multilingual embedding model produced a notable uplift with all top-k values above 90%. The subsequent reasoning-retriever configurations achieved up to 98.9% top-1 retrieval accuracy, representing a 12.5–17.5% improvement over standard RAG. In terms of linguistic performance, subject-matter experts found Boti’s responses to be accurate for voseo usage 98% of the time and correct in periphrastic future usage 92% of the time. The evaluation highlights not just factual accuracy but also fluency and dialectical appropriateness in Rioplatense Spanish.

Why it matters (impact for developers/enterprises)

This implementation demonstrates how a city government can scale a conversational AI system to manage a large catalogue of procedures while maintaining safety and accuracy. The guardrail design—customized, language-specific, and content-aware—illustrates how organizations can tailor LLM-enabled systems to address local norms and languages without sacrificing safety. By integrating LangGraph with Bedrock, developers can build agentic assistants that combine robust retrieval, precise disambiguation, and context-aware generation. For enterprises, the project showcases a practical architecture: a dual-layer system that protects users from harmful content while enabling complex information retrieval over a large, structured knowledge base. The conditioning of responses in a dialect and the explicit handling of disambiguation through a reasoning retriever offer a blueprint for building domain-specific assistants that require nuanced retrieval and precise policy-compliant outputs. The solution also emphasizes real-world impact: user-oriented design that delivers quick, relevant answers, with safe, guided escalation when queries fall outside the on-topic scope. By leveraging Bedrock Knowledge Bases and the Bedrock Converse API, organizations can integrate scalable LLM inference with a curated, government-grade knowledge base.

Technical details or Implementation

- System architecture: two parallel tracks at query time—input guardrail and government procedures agent. The guardrail assigns a primary category (approved or blocked) and a subcategory to each query; approved queries proceed to the agent, while blocked queries are redirected to safe topics.

- Input guardrail: a custom LLM classifier trained to detect harmful content, including offensive language, harmful opinions, prompt injection attempts, and unethical behavior. In evaluation, the guardrail blocked 100% of harmful queries, with some normal queries occasionally flagged as harmful, representing a conservative safety posture.

- Government procedures agent: uses a retrieval tool within a retrieval-augmented generation (RAG) framework to fetch context from Bedrock Knowledge Bases and generate responses in Rioplatense Spanish.

- Knowledge base construction: base procedure metadata (purpose, audience, costs, steps, requirements) plus comparative summaries describing differences between procedures. Summaries are clustered (~5 per group) and enriched with distinguishing descriptions via an LLM to form the final knowledge base entries. This approach mirrors contextual retrieval concepts but is implemented as a custom solution to fit local needs.

- Reasoning retriever: after initial retrieval, the agent applies its own chain-of-thought style reasoning to identify the exact procedures and URL sources to cite, ensuring traceability of the answer.

- Retrieval models and evaluation: standard methods included Titan-based chunking (250 words per chunk), Summaries Titan, and Summaries Cohere embeddings. Cohere Multilingual embeddings yielded strong performance (top-k values >90%). The reasoning retriever used Cohere embeddings plus LLMs (Anthropic Haiku 3, Claude 3 Sonnet, Claude 3.5 Sonnet) on Bedrock to select the most relevant results, achieving up to 98.9% top-1 accuracy and a 12.5–17.5% improvement over baseline RAG.

- Inference and latency: both the input guardrail and government procedures agent rely on the Bedrock Converse API for LLM inference, enabling optimized performance across subtasks.

- Evaluation and language fidelity: experts measured 98% voseo accuracy and 92% periphrastic future accuracy, and a structured safety assessment confirmed effective blocking of harmful content while preserving helpful, topic-aligned responses.

Key takeaways

- A dual-layer AI system (guardrails + procedure agent) can safely scale to many government procedures while preserving user experience.

- Custom guardrails tailored to locale and language can achieve strong safety performance without unduly constraining helpful dialogue.

- A reasoning retrieval flow that uses comparative summaries improves disambiguation and retrieval accuracy for complex policy domains.

- Bedrock Knowledge Bases + Bedrock Converse API enable integrated, scalable LLM inference for government information tasks.

- Rioplatense Spanish dialect features like voseo and periphrastic future can be effectively incorporated into AI responses with high accuracy.

FAQ

-

What problem does Boti address?

It helps citizens access information about government procedures by answering questions and guiding users to the correct procedure.

-

How does the input guardrail work?

It uses a custom LLM classifier to decide whether a query is approved or blocked; approved queries proceed to the government procedures agent, while blocked queries are redirected.

-

What is the reasoning retriever and why is it used?

The reasoning retriever uses comparative summaries and LLM-based selection to disambiguate procedures and identify the most relevant results for a given question.

-

What performance results were observed?

The guardrails blocked 100% of harmful queries in evaluation, and the reasoning retriever achieved up to 98.9% top-1 retrieval accuracy, with a 12.5–17.5% improvement over standard RAG. Experts reported 98% voseo accuracy and 92% periphrastic future accuracy.

-

What technologies underpin the solution?

LangGraph, Amazon Bedrock, Bedrock Knowledge Bases, and the Bedrock Converse API were used to build and run the agent.

References

- AWS ML Blog: Meet Boti: The AI assistant transforming how the citizens of Buenos Aires access government information with Amazon Bedrock. https://aws.amazon.com/blogs/machine-learning/meet-boti-the-ai-assistant-transforming-how-the-citizens-of-buenos-aires-access-government-information-with-amazon-bedrock/

More news

Move AI agents from proof of concept to production with Amazon Bedrock AgentCore

A detailed look at how Amazon Bedrock AgentCore helps transition agent-based AI applications from experimental proof of concept to enterprise-grade production systems, preserving security, memory, observability, and scalable tool management.

Prompting for precision with Stability AI Image Services in Amazon Bedrock

Amazon Bedrock now offers Stability AI Image Services, extending Stable Diffusion and Stable Image with nine tools for precise image creation and editing. Learn prompting best practices for enterprise use.

Monitor Amazon Bedrock batch inference using Amazon CloudWatch metrics

Learn how to monitor and optimize Amazon Bedrock batch inference jobs with CloudWatch metrics, alarms, and dashboards to improve performance, cost efficiency, and operational oversight.

Scale visual production using Stability AI Image Services in Amazon Bedrock

Stability AI Image Services are now available in Amazon Bedrock, delivering ready-to-use media editing via the Bedrock API and expanding on Stable Diffusion models already in Bedrock.

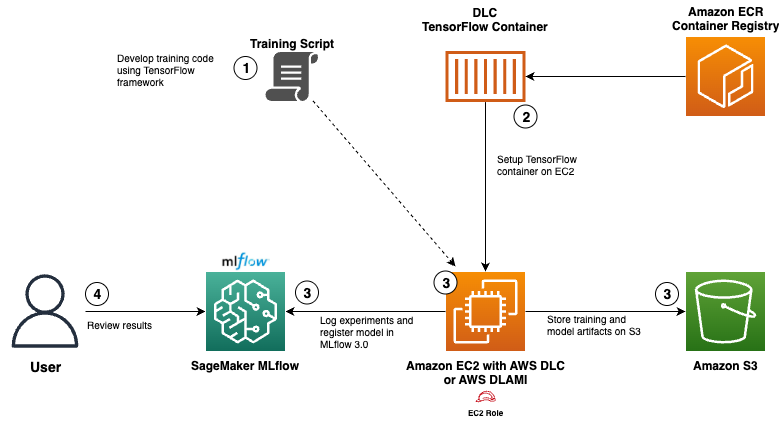

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.

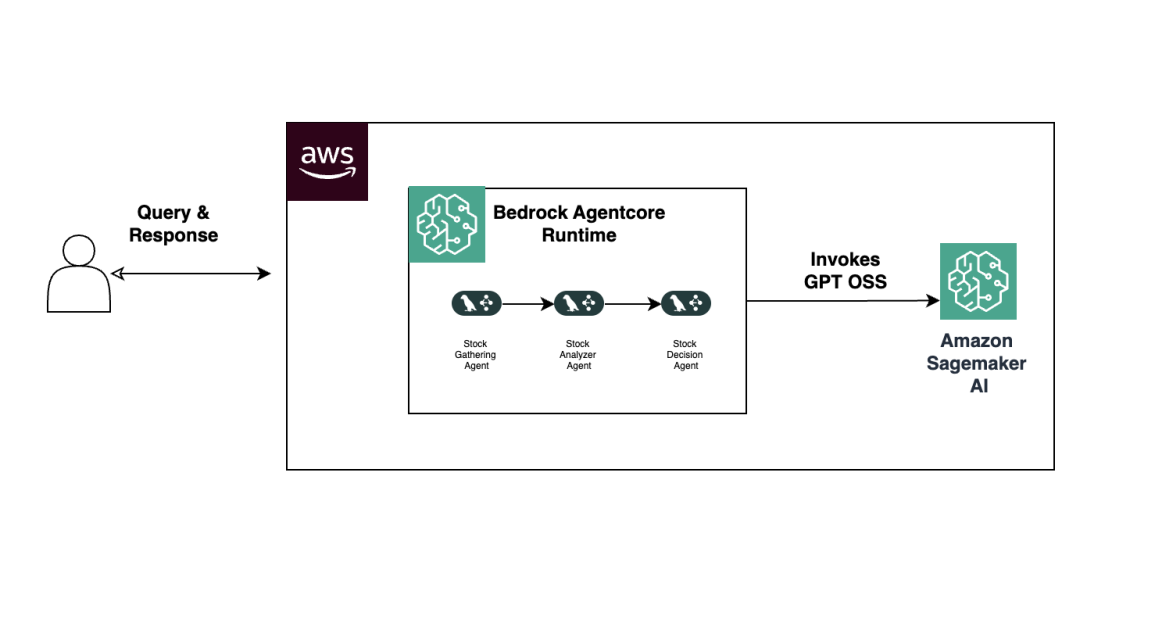

Build Agentic Workflows with OpenAI GPT OSS on SageMaker AI and Bedrock AgentCore

An end-to-end look at deploying OpenAI GPT OSS models on SageMaker AI and Bedrock AgentCore to power a multi-agent stock analyzer with LangGraph, including 4-bit MXFP4 quantization, serverless orchestration, and scalable inference.